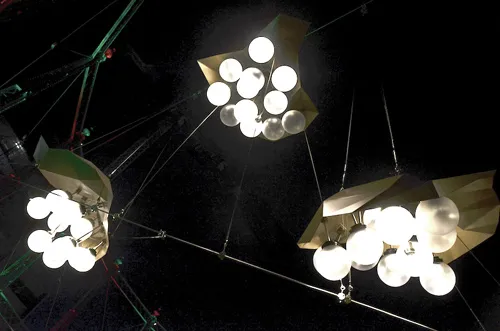

The Gasworks project is an interactive art installation I’ve been involved with, which loosely mimics brain cells as clusters of lights. Webcams detect motion and organically alter the lighting sequences of ten different sculptures (or neurones), each suspended on steel cabling above a public amphitheatre.

Artist Michael Candy wanted the installation and lighting sequence to look as analog as possible, with the whole thing reacting to the speed of the detected movements. Unfortunately this ruled out the simple PIR (passive infrared) sensors typically found in security systems. These have a single output pin that is either off (no motion) or on (motion detected), so they couldn’t tell us anything about the amount of activity behind a detection.

I eventually settled on using a webcam and a computer vision algorithm called optical flow, a technique often found in optical computer mice. I used the implementation found in OpenCV, which was really easy to integrate into Golang with cgo.

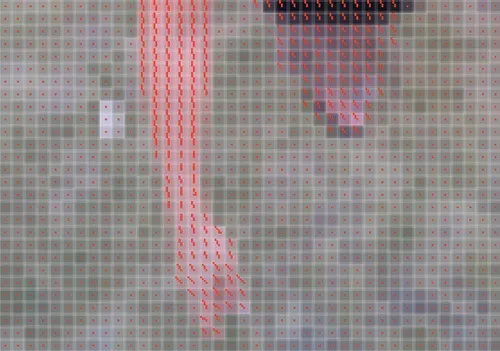

Optical flow returns an array of vectors, one for each pixel captured by the webcam. The magnitude and direction of each vector indicate how that pixel “moves” compared to the previous frame in the video stream.

To calculate the size of a detected movement, I summed the absolute x and y components of every movement vector that came out of the optical flow algorithm, averaged them over the frame and calculated the length (magnitude) of the result. I then subtracted a movement threshold and scaled the result down, so that frames with loads of movement had an ‘energy’ of around 0.1 while frames with no movement had an ‘energy’ of 0.0.

```go
/*
#cgo pkg-config: opencv
// Assumes the OpenCV 2.x C API (IplImage, cvGet2D); adjust for your install.
#include <opencv/cv.h>
*/
import "C"

import (
	"fmt"
	"math"
	"unsafe"
)

// calcDeltaEnergy returns the motion 'energy' of one optical flow frame.
// Configuration is defined elsewhere in the program.
func calcDeltaEnergy(flow *C.IplImage, config *Configuration) float64 {
	var i C.int
	var dx, dy float64

	// Accumulate the change in flow across all the pixels.
	totalPixels := flow.width * flow.height
	for i = 0; i < totalPixels; i++ {
		value := C.cvGet2D(unsafe.Pointer(flow), i/flow.width, i%flow.width)
		dx += math.Abs(float64(value.val[0]))
		dy += math.Abs(float64(value.val[1]))
	}

	// Average out the magnitude of dx and dy across the whole image.
	dx = dx / float64(totalPixels)
	dy = dy / float64(totalPixels)

	// The magnitude of accumulated flow forms our change in energy for the frame.
	deltaE := math.Sqrt((dx * dx) + (dy * dy))
	fmt.Printf("INFO: f[%f] \n", deltaE)

	// Clamp the energy to start at 0 for 'still' frames with little/no motion.
	deltaE = math.Max(0.0, (deltaE - config.MovementThreshold))

	// Scale the flow to be less than 0.1 for 'active' frames with lots of motion.
	deltaE = deltaE / config.OpticalFlowScale

	return deltaE
}
```

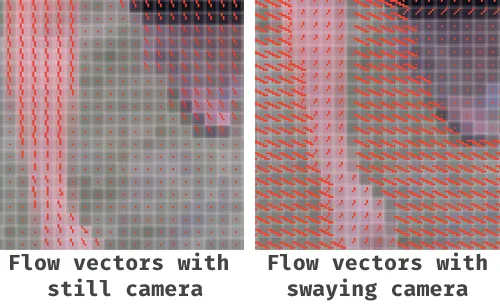

It was here that we ran into a little problem. The sculptures are suspended on steel cable rigging, and sway in the wind. The algorithm was getting confused, and a gentle sway in the wind would be falsely detected as people moving around, thus changing the lighting sequence.

I first tried sticking an accelerometer to the webcam and using its readings to compensate for the camera swaying in the wind. This turned out to be a “Bad Idea”™, mostly because of the latency between getting accelerometer readings and matching them with the right frame of video. It also added a considerable amount of complexity, and needless to say everyone was relieved when I worked out a software approach that didn’t need any additional hardware.

I realised that when the webcams and sculptures are still, only parts of the image have vectors, indicating detected motion. However, when the sculptures and cameras are swaying in the wind, the whole image has vectors that share a general trend: the direction in which the camera is moving.

To work out the general direction in which the camera was moving, and so stabilise the optical flow output, I first worked out the mean movement vector for the frame. I then subtracted the size of each of its components from the size of the corresponding component of every movement vector, clamping at zero.

```go
func calcDeltaEnergy(flow *C.IplImage, config *Configuration) float64 {
	var i C.int
	var dx, dy, mx, my float64
	totalPixels := flow.width * flow.height

	// Determine the mean movement vector.
	for i = 0; i < totalPixels; i++ {
		value := C.cvGet2D(unsafe.Pointer(flow), i/flow.width, i%flow.width)
		mx += float64(value.val[0])
		my += float64(value.val[1])
	}
	mx = math.Abs(mx / float64(totalPixels))
	my = math.Abs(my / float64(totalPixels))

	// Accumulate the change in flow across all the pixels.
	for i = 0; i < totalPixels; i++ {
		// Remove the mean movement vector to compensate for the sculpture that might be swaying in the wind.
		value := C.cvGet2D(unsafe.Pointer(flow), i/flow.width, i%flow.width)
		dx += math.Max((math.Abs(float64(value.val[0])) - mx), 0.0)
		dy += math.Max((math.Abs(float64(value.val[1])) - my), 0.0)
	}

	// Average out the magnitude of dx and dy across the whole image.
	dx = dx / float64(totalPixels)
	dy = dy / float64(totalPixels)

	// The magnitude of accumulated flow forms our change in energy for the frame.
	deltaE := math.Sqrt((dx * dx) + (dy * dy))
	fmt.Printf("INFO: f:%f m:[%f,%f]\n", deltaE, mx, my)

	// Clamp the energy to start at 0 for 'still' frames with little/no motion.
	deltaE = math.Max(0.0, (deltaE - config.MovementThreshold))

	// Scale the flow to be less than 0.1 for 'active' frames with lots of motion.
	deltaE = deltaE / config.OpticalFlowScale

	return deltaE
}
```

It took a bit of tweaking, but in the end the stabilising approach worked great, compensating for all but the most violent gusts of wind. The structural engineers have predicted the sculptures will experience 80 cm of lateral movement in 100 km/h wind gusts (a 1-in-5-year storm event). I’m actually really keen to see how the sculptures sense and react to a big subtropical storm. I reckon it would be a pretty awesome light show!
